In this post I detail how to get a Ceph Quincy cluster up and running. This is my standard go-to script for setting up any Ceph cluster, which I then tweak once it is up.

Set hostname for all nodes

hostnamectl set-hostname cephadm.ceph.local

Update the hosts file with all servers in the cluster

127.0.0.1 localhost

1.1.1.1 cephadm cephadm.ceph.local

1.1.1.2 cephgw1 cephgw1.ceph.local

1.1.1.3 cephgw2 cephgw2.ceph.local

1.1.1.4 cephosd1 cephosd1.ceph.local

1.1.1.5 cephosd2 cephosd2.ceph.local

1.1.1.6 cephosd3 cephosd3.ceph.local

Initial software

apt update

apt install -y $(tasksel --task-packages standard)

apt install -y wget gnupg python2-minimal snmpd ntp zip ifenslave lvm2 sudo curl nano bmon mc htop

wget -q -O ~/cephkey.asc 'https://download.ceph.com/keys/release.asc'

apt-key add ~/cephkey.asc

touch /etc/apt/sources.list.d/ceph.list

echo "deb https://download.ceph.com/debian-quincy/ $(lsb_release -sc) main" > /etc/apt/sources.list.d/ceph.list

touch /etc/apt/sources.list.d/backport.list

echo "deb http://deb.debian.org/debian buster-backports main" >> /etc/apt/sources.list.d/backport.list

Install cephadm

apt update

apt-get -t buster-backports install -y "smartmontools"

apt install -y cephadm ceph ceph-common ceph-base ceph-volume

apt update

Create a local SSH key

ssh-keygen -b 2048 -t rsa -f ~/.ssh/id_rsa -q -N ''

Get all node host keys and add them to known_hosts

ssh-keyscan [All_Nodes] >> ~/.ssh/known_hosts

Copy the local SSH key to all nodes

ssh-copy-id root@[All_Nodes]

Initial setup of all nodes
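Every `[All_Nodes]` command in this section has to be repeated once per host. A minimal sketch of a loop that does the repetition, assuming bash and the six hostnames from the hosts file above; `NODES` and `on_all_nodes` are hypothetical names, and by default it only prints the `ssh` commands so they can be checked first:

```shell
#!/usr/bin/env bash
# Sketch: repeat one command on every node instead of typing [All_Nodes] by hand.
# NODES and on_all_nodes are hypothetical names; the hostnames match the hosts file.
# DRY_RUN=1 (the default) only prints the ssh commands; DRY_RUN=0 runs them.
set -euo pipefail

NODES=(cephadm cephgw1 cephgw2 cephosd1 cephosd2 cephosd3)

on_all_nodes() {
  local node
  for node in "${NODES[@]}"; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      # Print what would be run on this node.
      printf 'ssh root@%s %s\n' "$node" "$*"
    else
      # Run the command on this node as root.
      ssh "root@${node}" "$@"
    fi
  done
}

on_all_nodes apt update
```

Running `DRY_RUN=0 on_all_nodes apt update` then executes it for real. Steps that need the node's own name, such as the `hostnamectl` line, still need a loop that passes `$node` into the command.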

ssh root@[All_Nodes] hostnamectl set-hostname [All_Nodes].ceph.local

rm -rf /etc/ceph/cephkey.asc

rm -rf /etc/ceph/backport.list

rm -rf /etc/ceph/ceph.list

touch /etc/ceph/backport.list

touch /etc/ceph/ceph.list

ssh root@[All_Nodes] apt install -y $(tasksel --task-packages standard)

wget -q -O /etc/ceph/cephkey.asc 'https://download.ceph.com/keys/release.asc'

echo "deb https://download.ceph.com/debian-quincy/ bullseye main" > /etc/ceph/ceph.list

echo "deb http://deb.debian.org/debian buster-backports main" > /etc/ceph/backport.list

scp /etc/ceph/backport.list root@[All_Nodes]:/etc/apt/sources.list.d/backport.list

ssh root@[All_Nodes] apt update

ssh root@[All_Nodes] apt install -y snmpd lvm2 sudo curl nano zip gnupg ntp bmon mc htop

scp /etc/ceph/ceph.list root@[All_Nodes]:/etc/apt/sources.list.d/ceph.list

scp /etc/ceph/cephkey.asc root@[All_Nodes]:/opt/cephkey.asc

ssh root@[All_Nodes] apt-key add /opt/cephkey.asc

ssh root@[All_Nodes] apt update

ssh root@[All_Nodes] apt-get -t buster-backports install -y "smartmontools"

ssh root@[All_Nodes] apt install -y cephadm ceph-volume ceph-common

Create the initial monitor node

cephadm bootstrap --mon-ip [Local_IP] --cluster-network [Ceph_Cluster_Network_Range] --allow-overwrite --allow-fqdn-hostname

Copy the Ceph public SSH key to all nodes

ssh-copy-id -f -i /etc/ceph/ceph.pub root@[All_Nodes]

Configure OSD nodes
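The `ceph orch host add` command in this section is repeated once per OSD node, and the gateway section repeats it once per gateway. A minimal sketch that prints those commands from the hosts file written at the start, assuming its `IP shortname fqdn` layout; `gen_host_add` is a hypothetical helper, and it only prints the commands rather than running them:

```shell
#!/usr/bin/env bash
# Sketch: print "ceph orch host add" commands for every hosts-file entry whose
# short name starts with a given prefix. gen_host_add is a hypothetical name.
# Expected line layout: <IP> <shortname> <fqdn>, as in the hosts file above.
set -euo pipefail

gen_host_add() {
  local hosts_file="$1" prefix="$2" labels="$3"
  # index($2, prefix) == 1 means the short name starts with the prefix (no regex).
  awk -v prefix="$prefix" -v labels="$labels" \
    'index($2, prefix) == 1 { printf "ceph orch host add %s %s --labels=%s\n", $3, $1, labels }' \
    "$hosts_file"
}
```

For example, `gen_host_add /etc/hosts cephosd osd_node,osd` prints one command per `cephosd*` entry, and `gen_host_add /etc/hosts cephgw gateway,gw,rgw,nfsgw,iscsigw` does the same for the gateways. Printing instead of executing leaves room to check each hostname and address before running anything.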

ceph orch host add [cephosdX].ceph.local [IP_Address] --labels=osd_node,osd

Add all OSD drives

ceph orch apply osd --all-available-devices

Configure gateway nodes

ceph orch host add [cephgwX].ceph.local [IP_Address] --labels=gateway,gw,rgw,nfsgw,iscsigw

ssh root@[cephgwX] apt install -y tcmu-runner ceph-iscsi targetcli-fb python3-rtslib-fb

ceph fs volume create ceph_fs_nfs_01

ceph fs subvolumegroup create ceph_fs_nfs_01 volgroup_nfs_01

ceph osd pool create cephfs_data

ceph osd pool create cephfs_metadata

ceph nfs cluster create Inital_nfsshare [cephgwX].ceph.local

ceph nfs export create cephfs Inital_nfsshare /inital_nfs_mount ceph_fs_nfs_01

Create the iSCSI config file
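The `echo ... >>` lines below append to the file, so running them a second time duplicates every key. A minimal sketch that writes the same keys in one step with a heredoc, assuming bash; `write_iscsi_cfg`, `API_PASSWORD` and `TRUSTED_IPS` are hypothetical names, with `API_PASSWORD` needing to match the password used in the dashboard gateway URL later on:

```shell
#!/usr/bin/env bash
# Sketch: write the iSCSI gateway config in one step instead of many "echo >>" lines.
# write_iscsi_cfg, API_PASSWORD and TRUSTED_IPS are hypothetical names.
# TRUSTED_IPS is a comma-separated list of the node IPs.
set -euo pipefail

write_iscsi_cfg() {
  local target="$1"
  # ">" truncates the file first, so re-running does not duplicate entries.
  # ${VAR:?...} aborts with a message if the variable is unset or empty.
  cat > "$target" <<EOF
[config]
cluster_name = ceph
gateway_keyring = ceph.client.admin.keyring
api_secure = false
api_user = admin
api_password = ${API_PASSWORD:?set API_PASSWORD first}
api_port = 5000
trusted_ip_list = ${TRUSTED_IPS:?set TRUSTED_IPS first}
loop_delay = 1
pool = rbd
EOF
}
```

Usage: set `API_PASSWORD` and `TRUSTED_IPS`, then run `write_iscsi_cfg /etc/ceph/iscsi.cfg` in place of the individual `echo` lines; the `scp` step that copies the file to the gateways stays the same.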

touch /etc/ceph/iscsi.cfg

echo "[config]" >> /etc/ceph/iscsi.cfg

echo "cluster_name = ceph" >> /etc/ceph/iscsi.cfg

echo "gateway_keyring = ceph.client.admin.keyring" >> /etc/ceph/iscsi.cfg

echo "api_secure = false" >> /etc/ceph/iscsi.cfg

echo "api_user = admin" >> /etc/ceph/iscsi.cfg

echo "api_password = [SecurePassword]" >> /etc/ceph/iscsi.cfg

echo "api_port = 5000" >> /etc/ceph/iscsi.cfg

echo "trusted_ip_list = [ALL_NODE_IPs]" >> /etc/ceph/iscsi.cfg

echo "loop_delay = 1" >> /etc/ceph/iscsi.cfg

echo "pool = rbd" >> /etc/ceph/iscsi.cfg

Run the following to set up the iSCSI gateways

ceph osd blocklist clear

ceph osd pool create rbd

ceph osd pool application enable rbd rbd

ceph osd pool create inital_iscsi

ceph osd pool application enable inital_iscsi rbd

scp /etc/ceph/iscsi.cfg root@[cephgwX]:/etc/ceph/iscsi-gateway.cfg

ceph dashboard set-iscsi-api-ssl-verification false

ssh root@[cephgwX] systemctl daemon-reload

ssh root@[cephgwX] systemctl enable rbd-target-gw

ssh root@[cephgwX] systemctl start rbd-target-gw

ssh root@[cephgwX] systemctl status rbd-target-gw

ssh root@[cephgwX] systemctl enable rbd-target-api

ceph osd blocklist clear

ssh root@[cephgwX] systemctl start rbd-target-api

ssh root@[cephgwX] systemctl status rbd-target-api

echo "http://admin:[SecurePassword]@[cephgwX_IP_Address]:5000" > /etc/ceph/[cephgwX].iscsi

ceph osd blocklist clear

ceph dashboard iscsi-gateway-add -i /etc/ceph/[cephgwX].iscsi

Set up Loki, Promtail, Grafana and Prometheus

ceph orch apply loki [cephadm].ceph.local

ceph orch apply promtail [cephadm].ceph.local

ceph orch apply grafana [cephadm].ceph.local

ceph orch apply prometheus [cephadm].ceph.local

ceph orch apply rgw Inital_rgw '--placement=label:rgw count-per-host:1' --port=8000

Set up the dashboard

echo "[SecureDashboardPassword]" > /etc/ceph/passwd.pwd
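Echoing a typed password leaves it in the shell history and, under the usual 022 umask, in a file any local user can read. A sketch of an alternative, assuming `head`, `base64` and `tr` are available; `make_password_file` is a hypothetical name:

```shell
#!/usr/bin/env bash
# Sketch: create the dashboard password file with a random password that only
# the owner can read. make_password_file is a hypothetical helper name.
set -euo pipefail

make_password_file() {
  local target="$1"
  # Subshell so that "umask 077" (new files get mode 0600) does not leak to the caller.
  (
    umask 077
    # 18 random bytes encode to exactly 24 base64 characters with no padding;
    # tr strips the trailing newline that base64 adds.
    head -c 18 /dev/urandom | base64 | tr -d '\n' > "$target"
  )
}
```

Running `make_password_file /etc/ceph/passwd.pwd` in place of the `echo` step, and `cat /etc/ceph/passwd.pwd` once to note the password, leaves the `ac-user-set-password` step unchanged.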

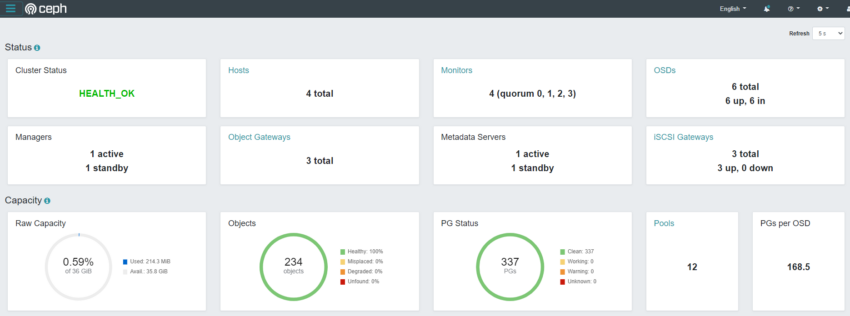

ceph dashboard ac-user-set-password admin -i /etc/ceph/passwd.pwd --force-password

If everything installed correctly, you can now log in to the dashboard at https://[IP_Address]:8443